1. Embracing Randomness

Creating an AI product begins with understanding the essential tasks involved. A primary focus is controlling the randomness of a large language model (LLM). Randomness, a double-edged sword, can either enhance the model’s generalization ability or lead to hallucinations. In creative contexts, where precision is less critical, randomness fuels imaginative outputs. Many users are initially captivated by the generative capabilities of large models. However, in domains requiring strict logic, such as factual data and mathematical code, precision is paramount, and hallucinations are more prevalent.

Early optimizations in mathematics and coding involved integrating external tools (e.g., Wolfram Alpha) and coding environments (e.g., Code Interpreter). Subsequent steps included intensive training and workflow enhancements, enabling the model to solve complex mathematical problems and generate practical code of substantial length.

Regarding objective facts, large models can store some information within their parameters, yet predicting which knowledge points are well-integrated is challenging. Excessive emphasis on training accuracy often leads to overfitting, reducing the model’s generalization capability. Training data inevitably has a cutoff date, limiting the model’s ability to predict future events. Consequently, enhancing generative results with external knowledge bases has gained significant traction.

2. Challenges and Prospects of RAG

2.1 What is RAG?

Retrieval Augmented Generation (RAG), proposed as early as 2020, has seen wider application with the recent development of LLM products. Mainstream RAG processes do not modify the model’s parameters but enhance input to improve output quality. Using a simple formula y = f(x) : f is the large model, a parameter-rich (random nonlinear) function; x is the input; y is the output. RAG focuses on optimizing x to enhance y .

RAG’s cost-efficiency and modularity, allowing model interchangeability, are significant advantages. By retrieving relevant content for the model rather than providing full texts, RAG reduces token usage, lowering costs and response times.

2.2 Long Context Models

Recent models supporting long contexts can process lengthy texts, overcoming the early limitation of short inputs. This development has led some to speculate that RAG may become less relevant. However, in needle-in-a-haystack tests (finding specific content within a long text), long-context models perform better than earlier versions but still struggle with mid-text content. Moreover, current tests are single-threaded, and the complexity of multi-threaded tests remains a challenge. Third-party testers have also raised concerns about potential training biases in some models.

While improvements in long-context models are ongoing, their high training and usage costs remain barriers. Compared to inputting entire texts, RAG maintains advantages in cost and efficiency. Nevertheless, RAG must evolve with model advancements, particularly regarding text processing granularity, to avoid over-processing that diminishes overall efficiency. Product development always involves balancing cost, timeliness, and quality.

2.3 The Vision for RAG

Moving beyond early RAG applications reliant on vector storage and retrieval, a systematic approach to RAG framework construction is necessary. Content, retrieval methods, and generators (LLMs) are the fundamental elements of a RAG system.

Optimizing retrievers has been extensively explored due to their convenience. Beyond vector databases, other data structures like key-value, relational, or graph databases should be considered based on data structure. Adjusting retrievers accordingly can further enhance retrieval aggregation.

LLMs can play a crucial role in content preprocessing. Rather than simple slicing, vectorization, and retrieval, using classification models and LLMs for summarization and reconstruction can store content in more LLM-friendly formats. This approach relies more on semantics than vector distances, significantly improving generation quality. The TypoX AI team has validated this method in product implementation.

Optimizing multiple elements simultaneously, especially integrating retrieval with generation model training, represents a significant direction for RAG. This integration can enhance both retrieval and generation quality. Some argue that advancements in LLM capabilities and lower-cost fine-tuning will render RAG obsolete. However, enhancing f (the LLM’s parameters) and x (the input) are complementary strategies. Advanced RAG also involves model fine-tuning for specific knowledge bases, extending beyond input enhancement. RAG’s value lies in superior generative results compared to raw LLMs, better timeliness and efficiency than fine-tuned models, and lower configuration costs.

3. Human and AI Interaction

3.1 Positioning of Large Models

The author opposes the notion that large models alone can meet all user needs. While powerful, large models alone do not suffice to create fully functional agents. Custom-trained or fine-tuned smaller models showcase a team’s capability but may not always enhance user experience. Investing resources in other elements (tools, knowledge bases, workflows) may yield better results. In the Web3 industry, lacking proprietary training data and evaluation standards, the focus should be on developing industry-specific databases and standards, not merely ranking universal (small) models.

Underestimating large models is also a mistake. Early TypoX AI product exploration involved low trust in models, leading to overdeveloped processes. Balancing hard logic with LLM involvement, we achieved an optimal balance, addressing issues like increased costs and slower response times due to quality assurance measures (e.g., LLM self-reflection).

3.2 The Value of Humans

Advancements in AI capabilities highlight human value more concretely. In human-computer interactions, humans remain the most efficient agents, knowing their needs best. AI can self-reflect, but human decision-making aligns more closely with user requirements. Prioritizing accuracy over response time may not always be wise, as some decisions are better made by users, who should retain primary decision-making authority.

Ignoring human presence and overemphasizing model performance neglects user experience and the efficiency of human-computer collaboration. Transforming product users into co-creators aligns with the decentralized spirit of the crypto community. From preferences for knowledge bases and tools to prompt and workflow exploration, and the corpus for model training and fine-tuning, all components of DAgent rely on community participation. The TypoX AI team has initiated mechanisms for user collaboration, gradually opening up to the community, ensuring every TypoX AI user, regardless of hardware limitations, can participate in the DAgent ecosystem construction.

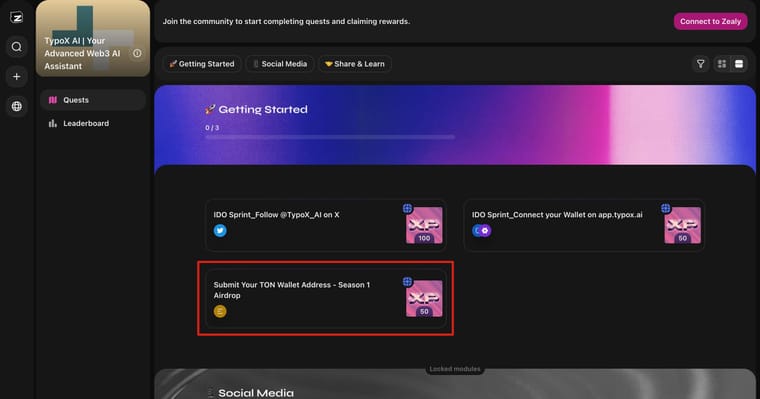

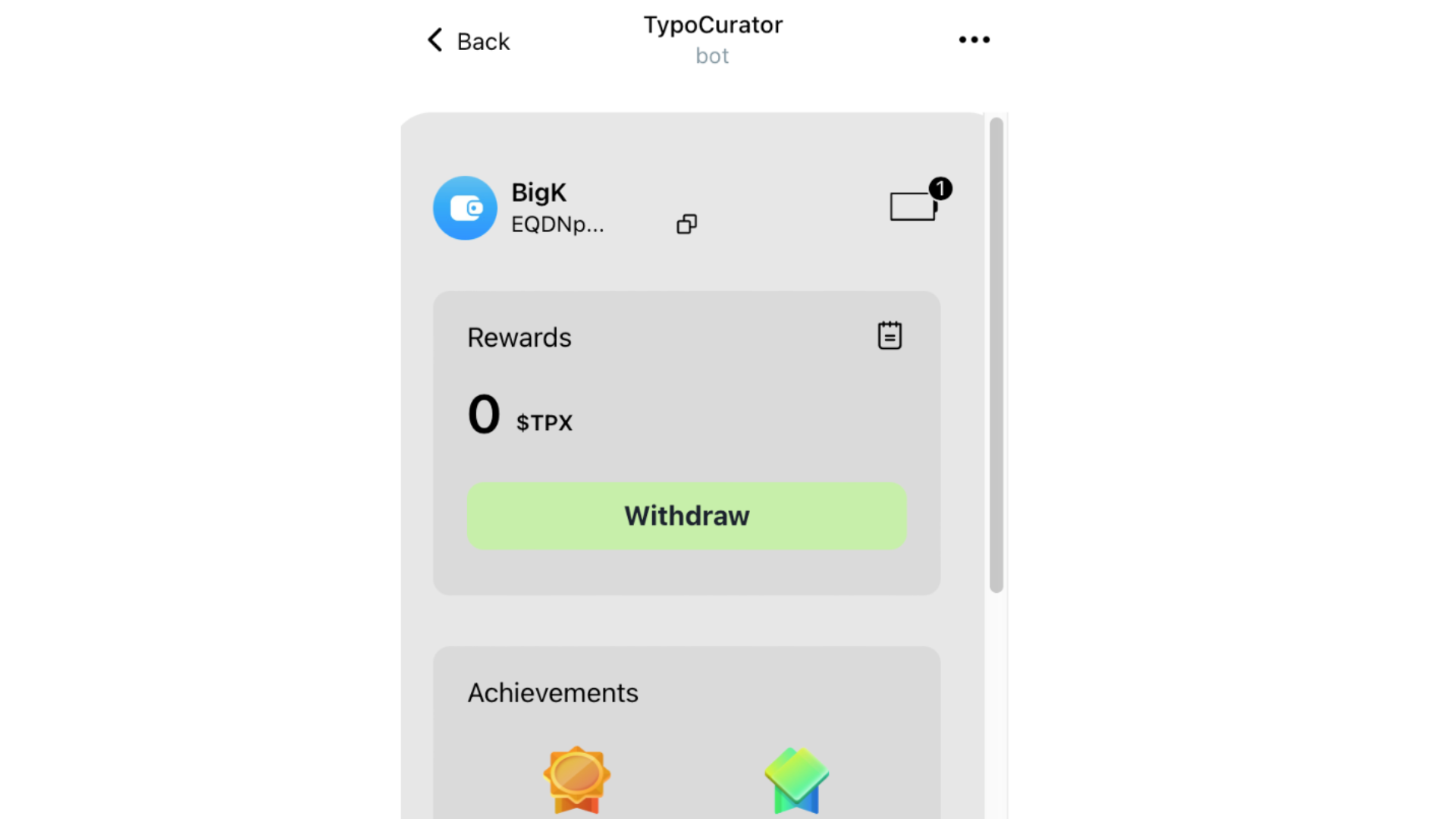

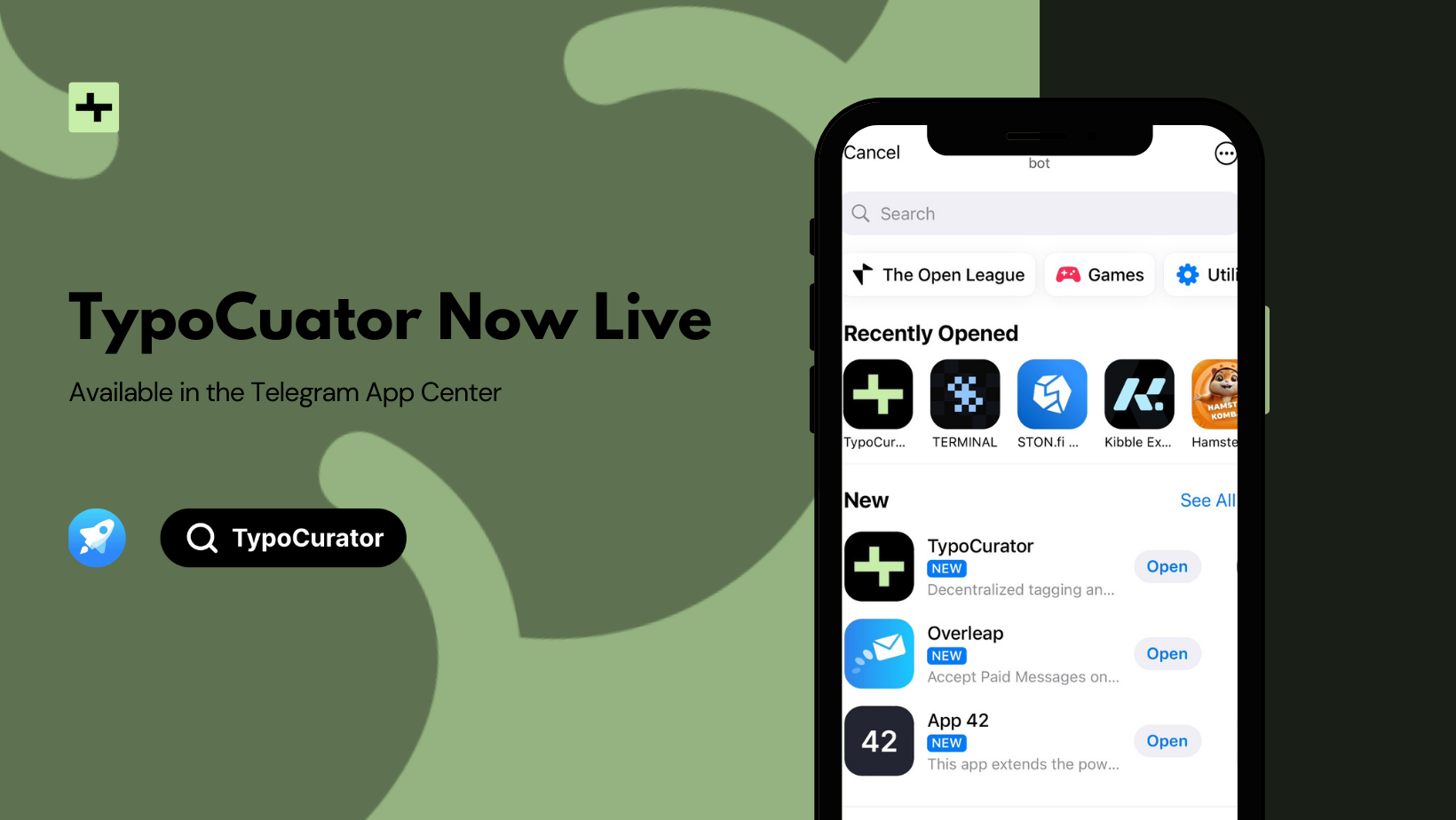

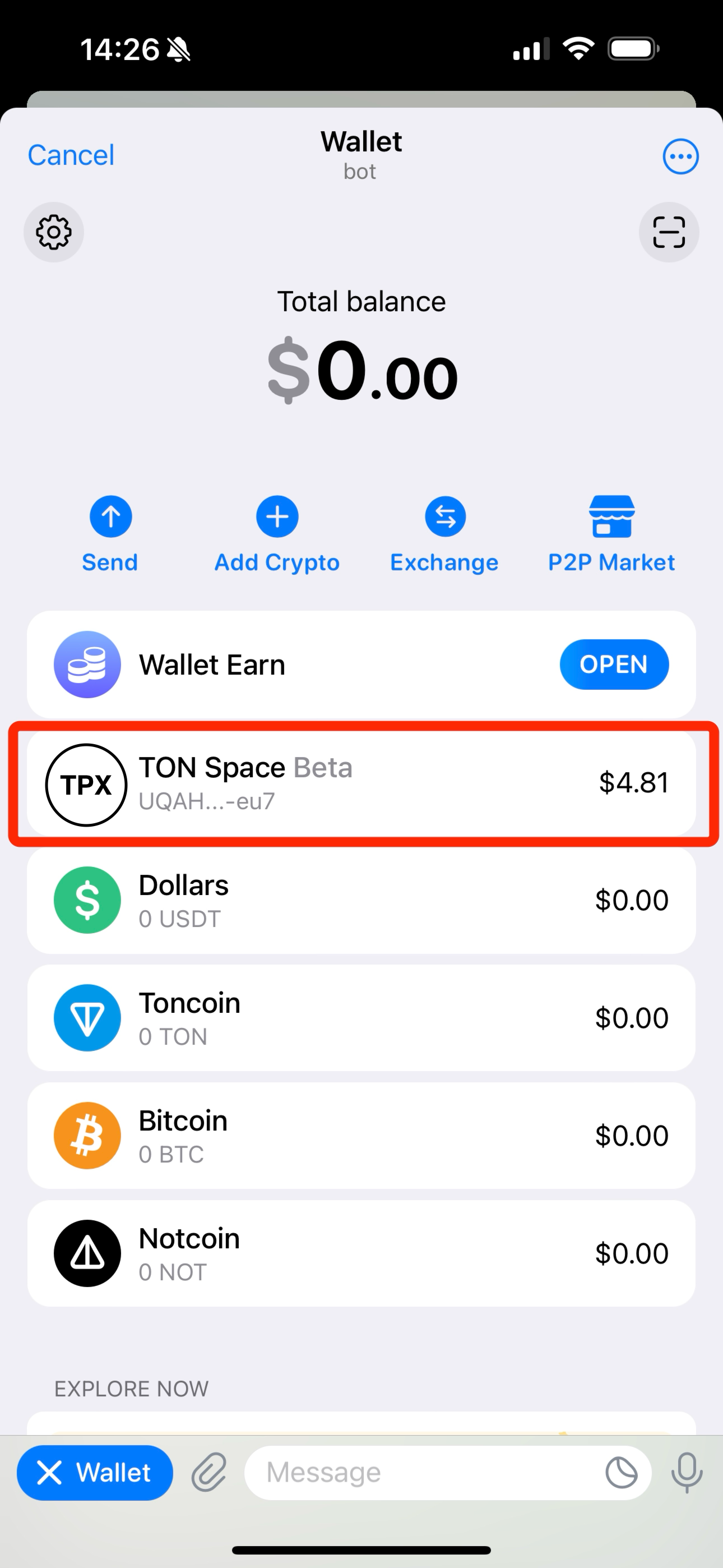

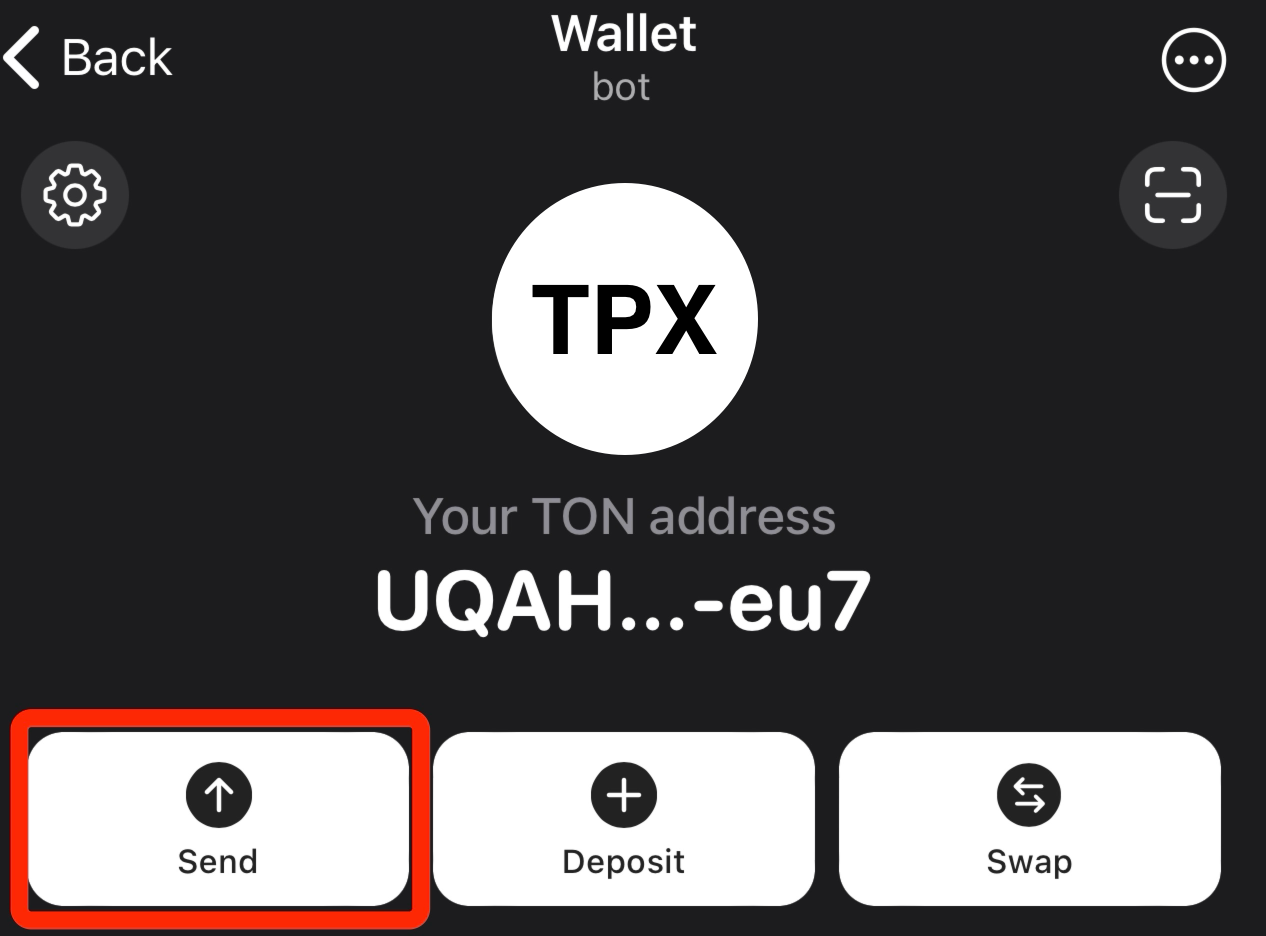

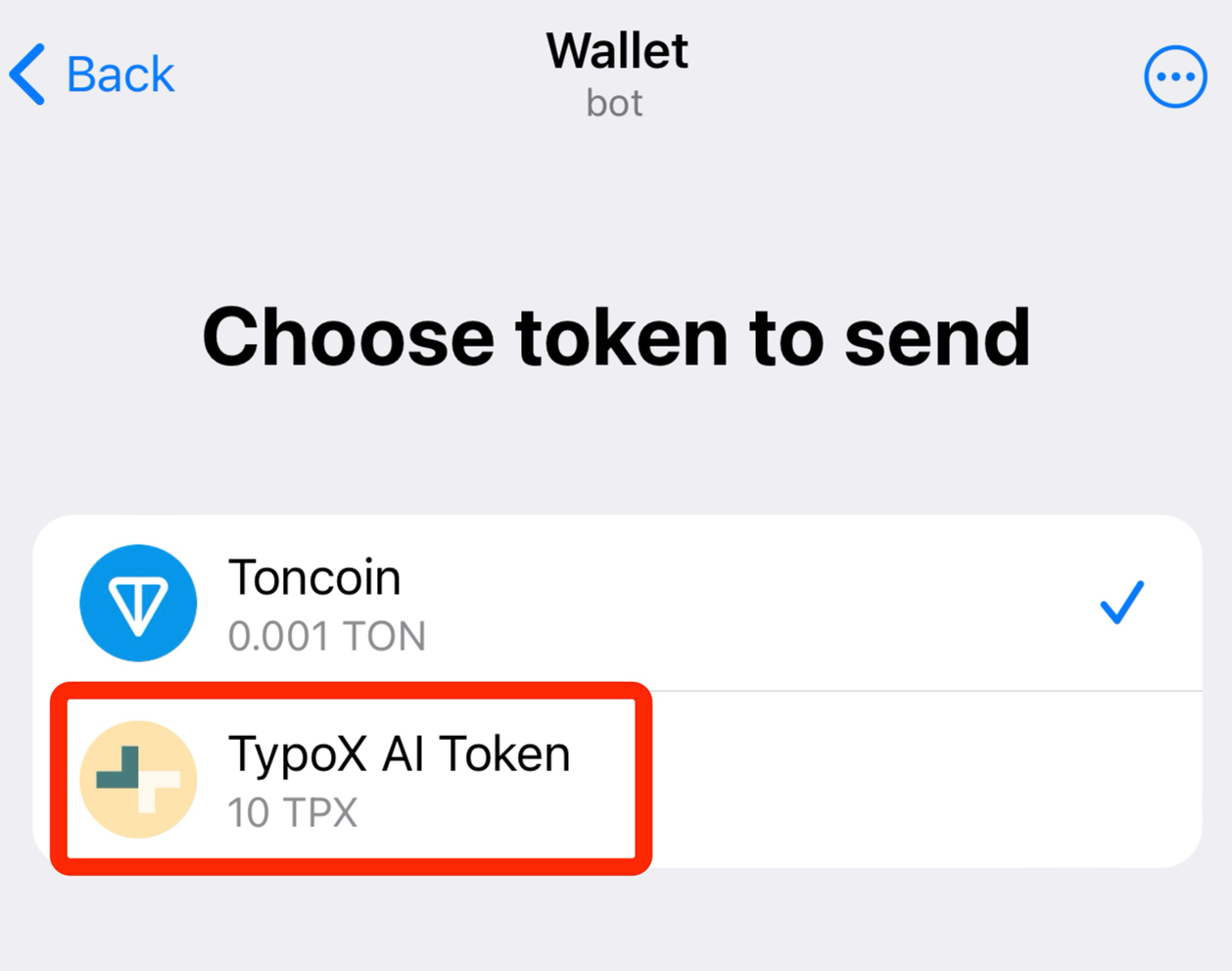

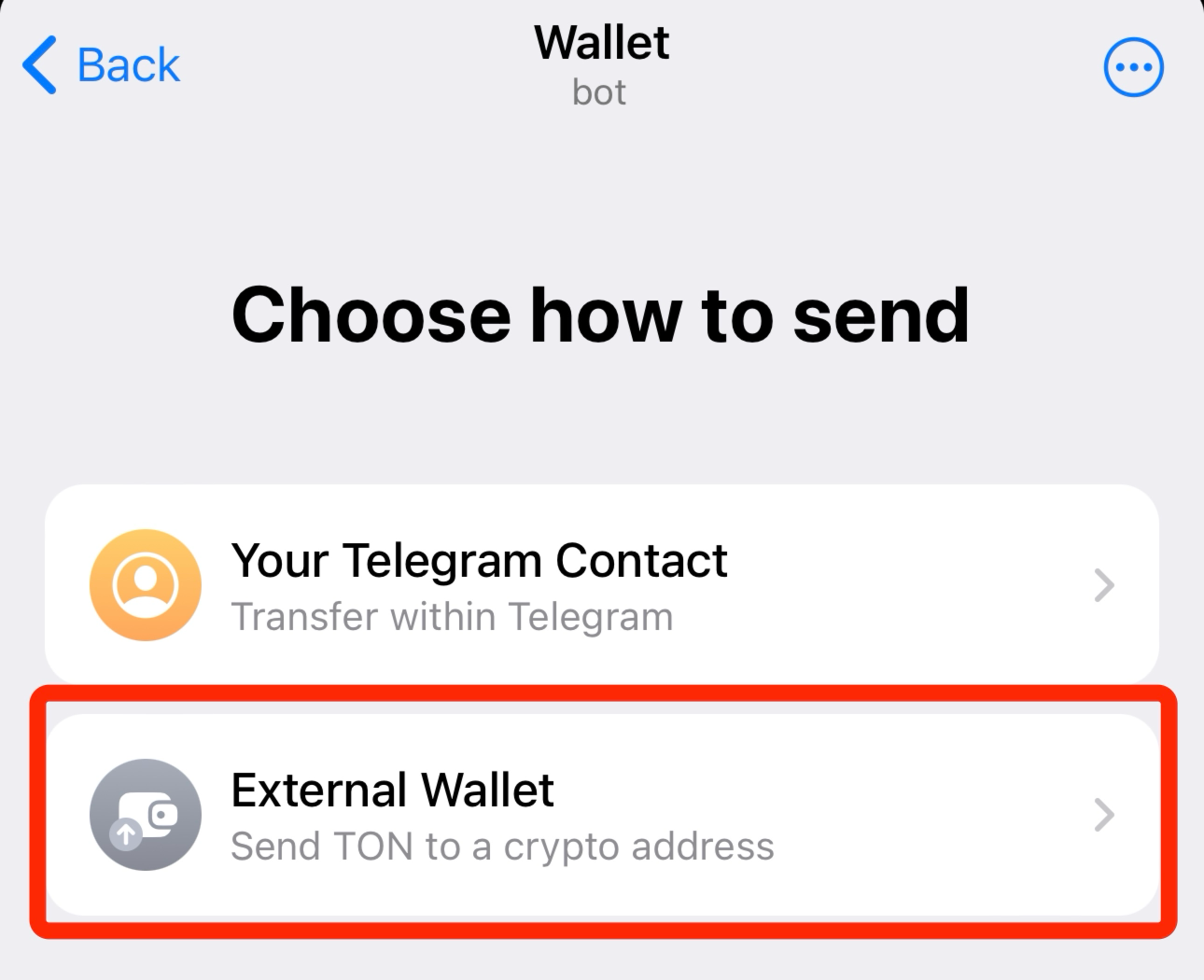

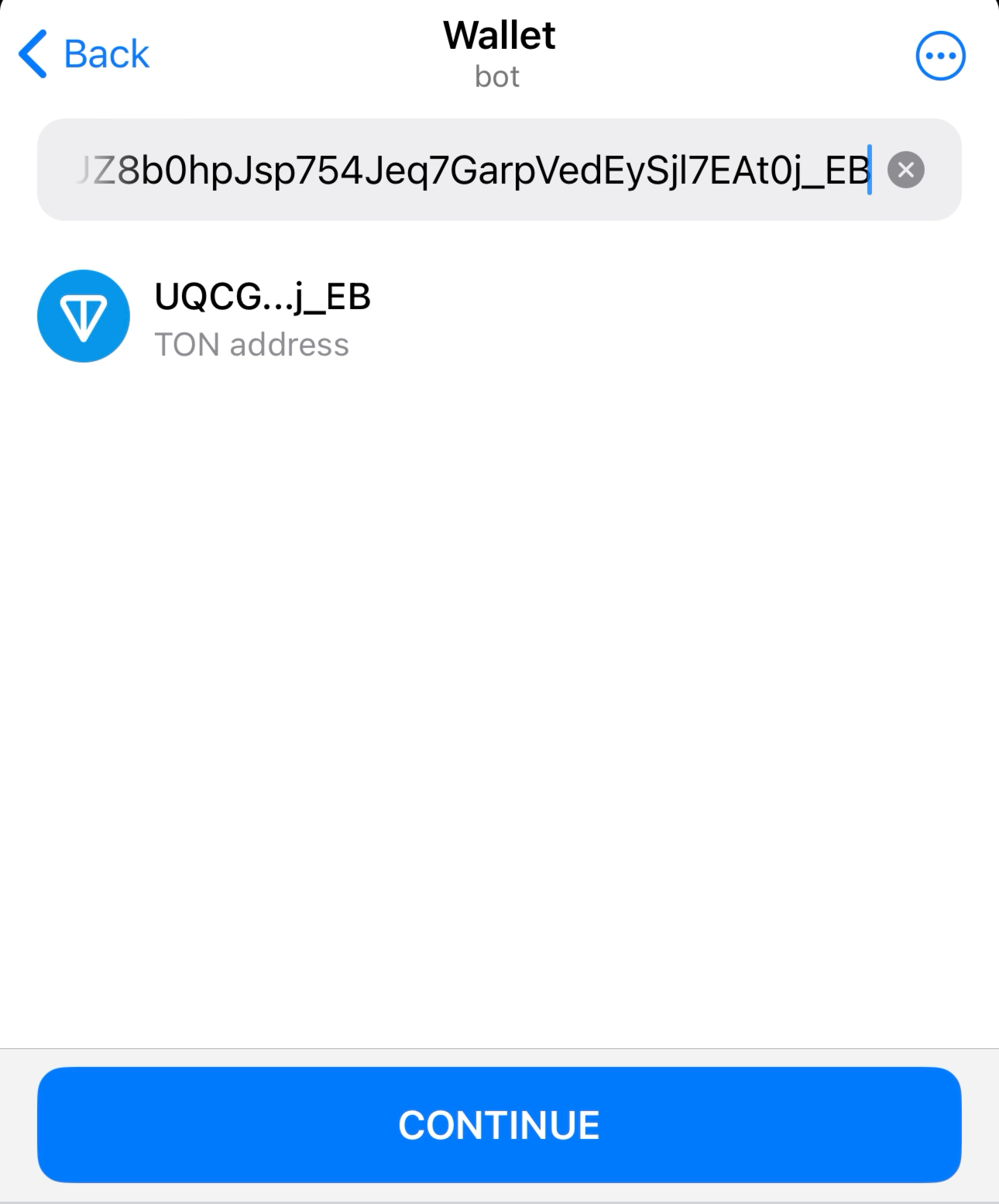

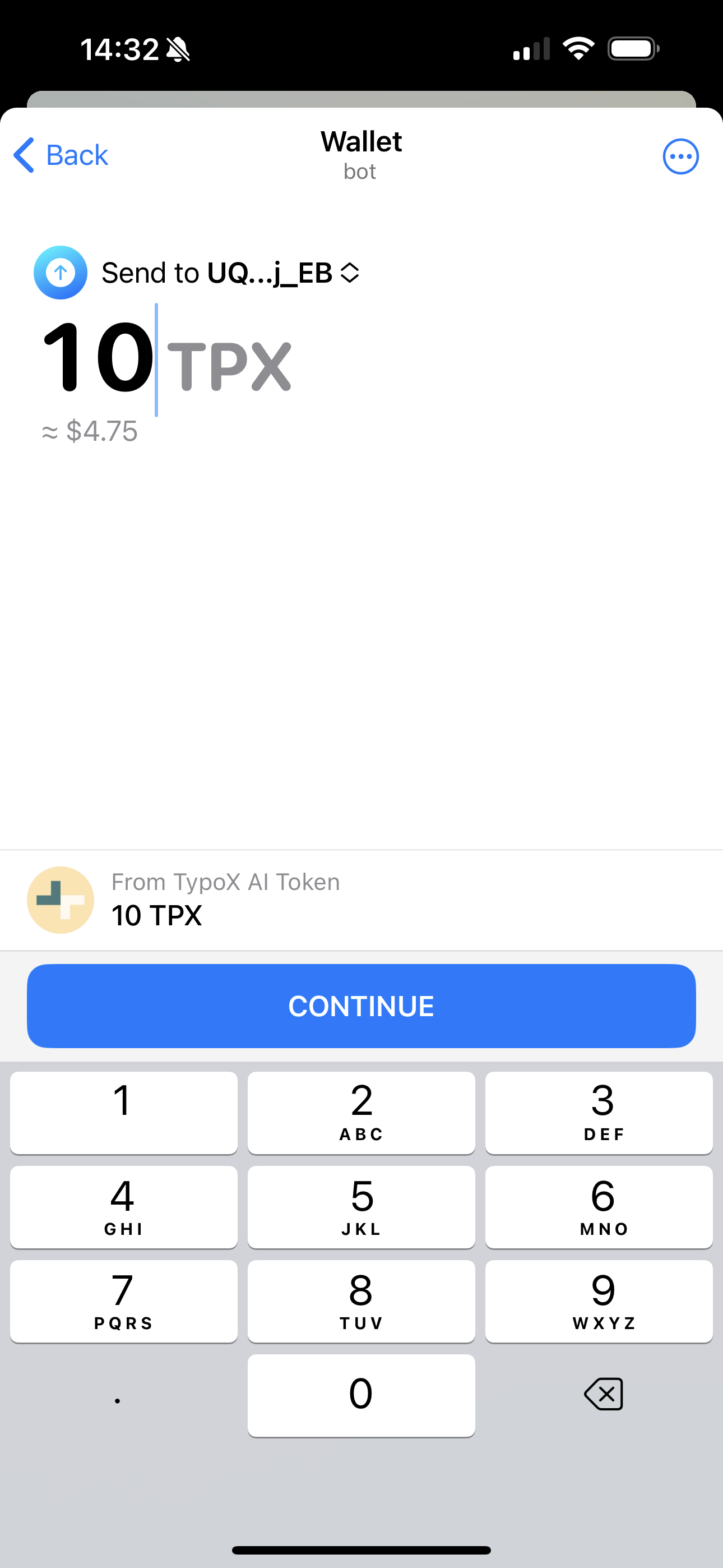

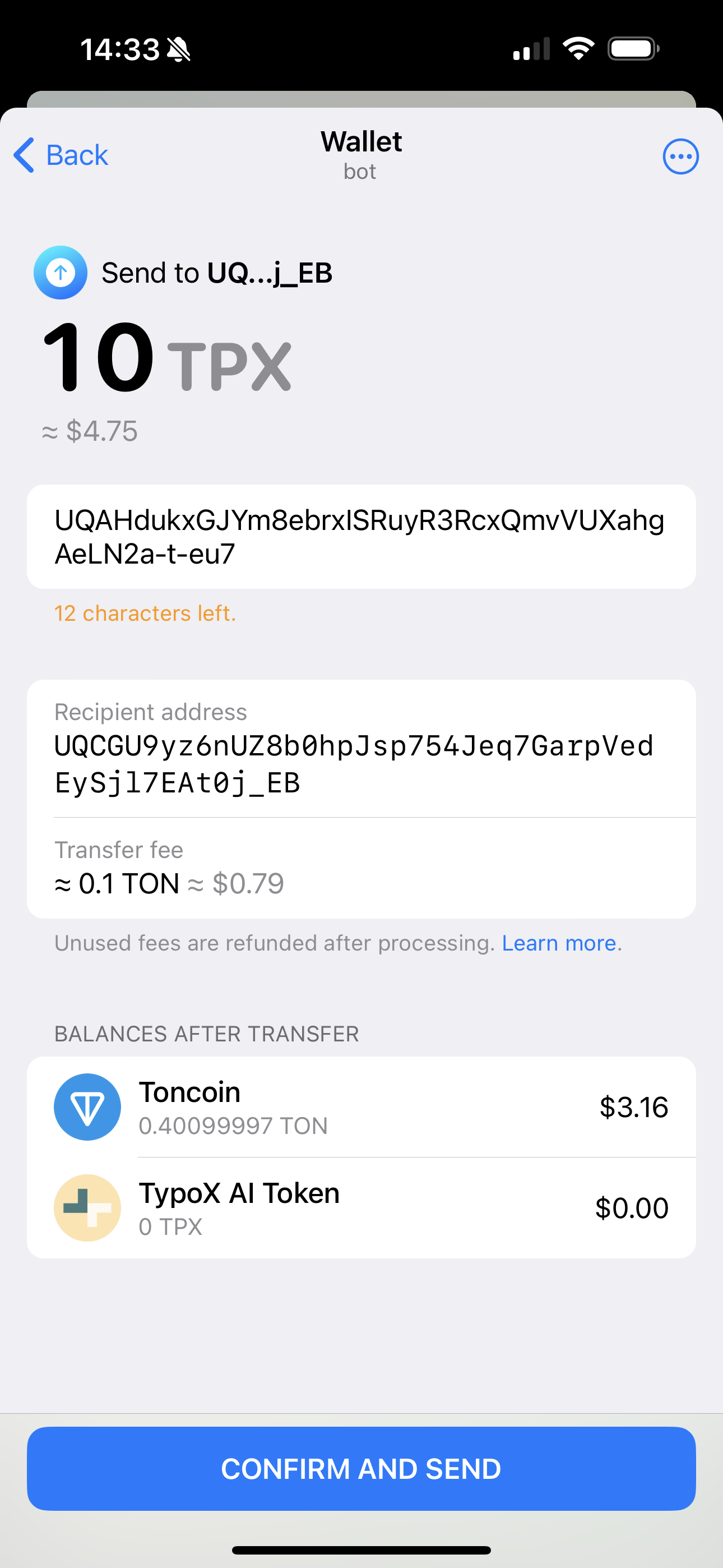

Exciting News! TypoX AI’s S1 Airdrop is Now Live!

Exciting News! TypoX AI’s S1 Airdrop is Now Live!