Casual Writing: Trying to Integrate CrewAI with Gemini


Our company recently replaced GPT-3.5 with Gemini-Flash entirely. Gemini is Google's LLM family, and Gemini-Pro has been available for over a year. Although Gemini-Pro is positioned against OpenAI's GPT-4, its performance is noticeably worse than GPT-4's, let alone that of OpenAI's latest GPT-4o.

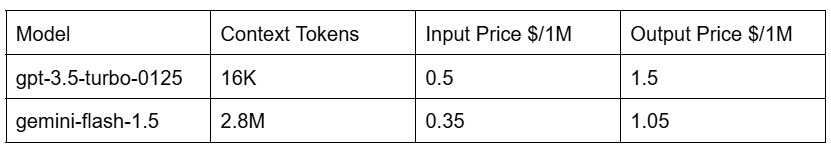

However, the recently launched Gemini-Flash-1.5, which targets GPT-3.5, outperforms it by a wide margin. Its context window is far larger (about 1 million tokens, compared with GPT-3.5's maximum of 16K), and it is also much cheaper. The table below compares GPT-3.5-turbo-0125 and Gemini-Flash-1.5.

That said, the Gemini ecosystem is still less mature than OpenAI's. For example, CrewAI currently offers no ready-made way to connect to Gemini. The CrewAI Assistant built by the official CrewAI team suggests connecting to Gemini through a custom tool. However, a custom tool cannot fully replace GPT, because a tool is only invoked once an Agent is running; before that point, the default LLM is still required to handle certain tasks.
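To make this limitation concrete, here is a toy tool-calling loop in Python. It is a simplified sketch, not CrewAI's implementation, and `run_agent`, `fake_gpt`, and `fake_gemini_tool` are hypothetical names. It shows the ordering problem: even when a tool wraps Gemini, the agent's own LLM has to run first to decide that the tool should be used, so the default LLM never drops out of the loop.

```python
from typing import Callable, Dict, List

# Hypothetical names throughout: a generic tool-calling loop,
# not CrewAI's actual implementation.
LLM = Callable[[str], str]
Tool = Callable[[str], str]


def run_agent(task: str, agent_llm: LLM, tools: Dict[str, Tool], calls: List[str]) -> str:
    """Run one step of a toy agent, recording which component is called."""
    # Step 1: the agent's own LLM reads the task and picks a tool (or answers directly).
    calls.append("agent_llm")
    choice = agent_llm(task)
    # Step 2: only after that decision can a tool (e.g. one wrapping Gemini) run.
    if choice in tools:
        calls.append(choice)
        return tools[choice](task)
    return choice


def fake_gpt(prompt: str) -> str:
    # Stand-in for the default LLM: it always delegates to the Gemini tool.
    return "gemini_tool"


def fake_gemini_tool(prompt: str) -> str:
    # Stand-in for a custom tool that would forward the prompt to Gemini.
    return f"Gemini answer to: {prompt}"


calls: List[str] = []
answer = run_agent("Summarize the report", fake_gpt, {"gemini_tool": fake_gemini_tool}, calls)
print(calls)   # ['agent_llm', 'gemini_tool']
print(answer)  # Gemini answer to: Summarize the report
```

However much work the tool does, `calls` always starts with `agent_llm`: the tool only ever runs on the agent LLM's say-so.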

The good news is that LangChain now supports Gemini. All you need is the `langchain-google-genai` package:

```bash
pip install langchain-google-genai
```

Next, I will try to see whether CrewAI can replace its default LLM with Gemini entirely by going through LangChain.
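Here is a minimal sketch of what the LangChain route might look like. It assumes that `langchain-google-genai` and `crewai` are installed, that a `GOOGLE_API_KEY` environment variable holds a Google AI Studio key, and that the installed CrewAI version accepts a LangChain chat model through `Agent(llm=...)`. `build_gemini_llm` and `build_researcher` are hypothetical helpers, the model name `gemini-1.5-flash` is an assumption, and the agent's role, goal, and backstory are placeholders. Both third-party imports happen inside the functions, so the file loads even where those packages are missing.

```python
import os


def build_gemini_llm(model: str = "gemini-1.5-flash", temperature: float = 0.0):
    """Create a LangChain chat model backed by Gemini (hypothetical helper).

    Assumes `pip install langchain-google-genai` and a GOOGLE_API_KEY env var.
    """
    if not os.environ.get("GOOGLE_API_KEY"):
        raise EnvironmentError("GOOGLE_API_KEY is not set")
    # Imported lazily so this module still loads where the package is missing.
    from langchain_google_genai import ChatGoogleGenerativeAI

    return ChatGoogleGenerativeAI(model=model, temperature=temperature)


def build_researcher(llm):
    """Hypothetical CrewAI agent that uses the given LLM instead of the default."""
    from crewai import Agent

    return Agent(
        role="Researcher",                      # placeholder role
        goal="Summarize the given documents",   # placeholder goal
        backstory="A concise, careful analyst.",  # placeholder backstory
        llm=llm,  # assumption: this CrewAI version accepts a LangChain chat model here
        verbose=True,
    )
```

Usage would be `agent = build_researcher(build_gemini_llm())`. Passing the LLM to each agent explicitly keeps the choice visible; whether this alone removes CrewAI's reliance on its default LLM is exactly what the experiment has to confirm.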